We’ve been sold a beautiful lie. That intelligence is an agent maximizing rewards in an environment. That learning is reinforcement of successful patterns. That consciousness can be captured by Q-values and policy gradients.

But life refuses to fit into this neat mathematical box.

The Original Sin: Separation

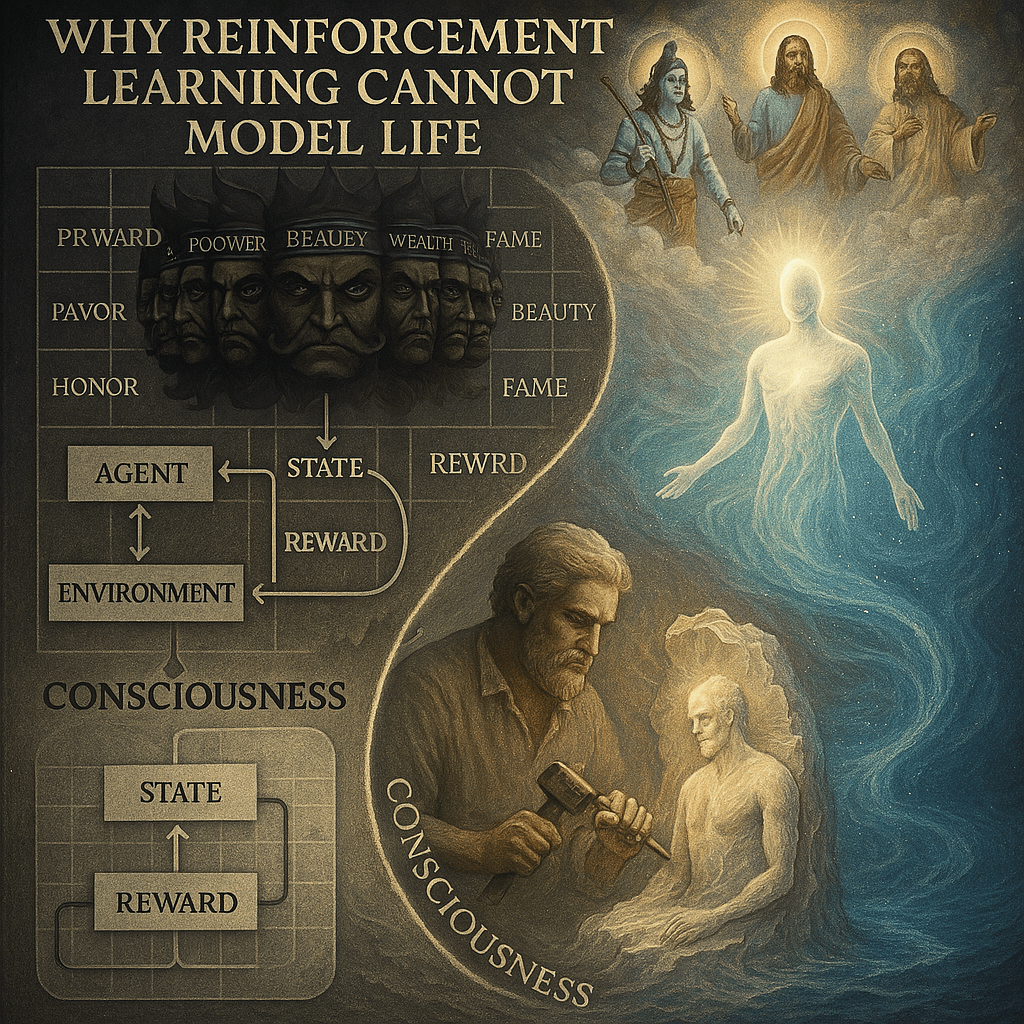

Reinforcement Learning begins with a fundamental assumption that dooms it from the start. It cleaves reality into three parts:

- An agent (you)

- An environment (not you)

- Rewards (what you get from not-you)

This Cartesian wound runs so deep in RL’s mathematics that we barely question it. State s ∈ S belongs to the environment. Action a ∈ A belongs to the agent. Reward r ∈ R flows from the environment to the agent. Clean. Separate. Dead wrong.
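To see how literally the formalism draws this line, here is a minimal sketch of the standard interaction loop. The two-state toy environment and the names `env_step`, `agent_act`, and `run_episode` are assumptions made up for illustration, not any library's API.

```python
import random

def env_step(state, action):
    """Environment side (hypothetical toy dynamics): owns the state and the reward."""
    next_state = (state + action) % 2          # s' depends on s and a
    reward = 1.0 if next_state == 1 else 0.0   # r is handed back to the agent
    return next_state, reward

def agent_act(state, rng):
    """Agent side: sees a state, returns an action from A = {0, 1}."""
    return rng.choice([0, 1])

def run_episode(steps=5, seed=0):
    """Run the loop: the agent acts, the environment answers with (s', r)."""
    rng = random.Random(seed)
    state, total = 0, 0.0
    trajectory = []
    for _ in range(steps):
        action = agent_act(state, rng)
        next_state, reward = env_step(state, action)
        trajectory.append((state, action, reward))
        total += reward
        state = next_state
    return trajectory, total

trajectory, total = run_episode()
print(trajectory, total)
```

Notice that the two functions never share anything except the values passed across the boundary. That interface is the separation in question.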

Ask yourself: when you breathe, where does the agent end and the environment begin? When you think, is the thought yours or does it arise from the cosmic environment? When you love, who is the lover, the beloved, and the love itself?

The mystics knew what RL cannot grasp: Agent and environment are one consciousness appearing as two.

The Linguistic Prison: What Are We Reinforcing?

Even the name betrays the confusion. Reinforcement. To make stronger by adding material. To build up through repetition. But is this how consciousness evolves?

Watch a child become an adult. Do they accumulate behaviors like collecting coins? Or do they shed childishness to reveal maturity?

Watch a seeker become a sage. Do they reinforce their ego patterns? Or do they dissolve them in the fire of inquiry?

True learning is not reinforcement but refinement. Not accumulation but distillation. The sculptor doesn’t add marble to create David – she removes everything that isn’t David.

Yet RL keeps adding. More Q-values. Stronger policies. Higher rewards. Building a tower of conditioning that grows further from truth with each layer.

The Reward Delusion

Here’s where RL reveals its deepest confusion. It assumes rewards are:

- External to the agent

- Fixed in nature

- The goal of action

All three assumptions shatter against reality.

Rewards Are Not External

When a mother feeds her child, where is the reward? In the child’s satisfied smile? In the mother’s fulfilled heart? Or in the sacred act itself? The reward is not external – it IS the unity of mother, child, and nourishment expressing itself.

When Hanuman leaps across the ocean to find Sita, what reward drives him? None! The leap itself is the reward. Service itself is the reward. Love itself is the reward.

RL cannot model this because it cannot escape its subject-object duality.

Rewards Are Not Fixed

A child cries for candy. A teenager craves approval. An adult seeks success. An elder yearns for meaning. A sage wants nothing.

The reward function itself evolves. What motivated yesterday repels today. What seems worthless now becomes precious tomorrow.

Show me the RL algorithm that handles this! Even “intrinsic motivation” and “inverse RL” assume some stable reward structure to be discovered. But consciousness doesn’t have a reward function – consciousness transforms what it values.

Rewards Are Not the Goal

This is the subtlest error. RL assumes all action aims at reward. But watch the true masters:

Rama didn’t follow dharma for reward – dharma WAS the action. Krishna didn’t play his flute for reward – the playing WAS the joy. Christ didn’t sacrifice for reward – love WAS the crucifixion.

Their every act was its own fulfillment. Not action→reward but action=reward.

The Policy Paradox

RL dreams of learning the optimal policy π(a|s). Given this state, take that action. But life laughs at this mechanical mapping.
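In its simplest form, that mapping can be sketched as a lookup from states to action probabilities. The preference numbers, the state label `s0`, and the helper names `softmax_policy` and `greedy_action` below are hypothetical, chosen only to make π(a|s) concrete.

```python
import math

def softmax_policy(preferences):
    """Turn per-action preference scores into probabilities pi(a|s)."""
    top = max(preferences.values())  # subtract the max for numerical stability
    exps = {a: math.exp(p - top) for a, p in preferences.items()}
    norm = sum(exps.values())
    return {a: e / norm for a, e in exps.items()}

def greedy_action(probs):
    """Pick the single most probable action for a state."""
    return max(probs, key=probs.get)

# Hypothetical preference table: one row per state, one score per action.
preferences = {"s0": {"a0": 2.0, "a1": 1.0, "a2": 1.0}}

# The policy: for every state, a fixed distribution over actions.
pi = {s: softmax_policy(p) for s, p in preferences.items()}
print(pi["s0"], greedy_action(pi["s0"]))
```

However the scores are learned, the result has this shape: for a given state, one distribution over actions.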

Consider three avatars:

- Rama: Faced with Kaikeyi’s demands, he gladly embraces exile

- Krishna: Faced with Duryodhana’s armies, he schemes and dances through war

- Christ: Faced with crucifixion, he forgives his tormentors

Same state (facing injustice). Completely different actions. Which policy is “optimal”?

None and all! Each expressed their unique svabhava – essential nature. Rama couldn’t act like Krishna. Krishna couldn’t act like Christ. They weren’t following policies – they were expressing the infinite creativity of consciousness through distinct flavors.

No learned mapping can capture this. No policy gradient can discover this. Because authentic action doesn’t follow rules – it expresses essence.

The Ten-Headed Monster of Value

Remember Ravana’s ten heads? Our perception of value is equally multi-headed:

- Sensual pleasure

- Emotional satisfaction

- Social validation

- Intellectual achievement

- Power and control

- Security and survival

- Aesthetic beauty

- Moral righteousness

- Spiritual attainment

- Freedom from all of the above

RL typically models one, maybe two heads. Even sophisticated approaches like multi-objective RL often just give each head a weight and sum the results. But consciousness doesn’t optimize a weighted sum – consciousness transcends the entire value structure.
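The weighted sum in question is usually called linear scalarization. Here is a minimal sketch, assuming ten reward components named after the heads above. The weights and reward values are invented for illustration, and `scalarize` is a hypothetical helper, not a library function.

```python
HEADS = [
    "sensual", "emotional", "social", "intellectual", "power",
    "security", "aesthetic", "moral", "spiritual", "freedom",
]

def scalarize(rewards, weights):
    """Collapse a vector of per-head rewards into one scalar: sum of w_i * r_i."""
    if set(rewards) != set(weights):
        raise ValueError("rewards and weights must cover the same heads")
    return sum(weights[h] * rewards[h] for h in rewards)

# Hypothetical numbers: equal weights, reward on only two heads.
weights = {h: 0.1 for h in HEADS}
rewards = {h: 0.0 for h in HEADS}
rewards["social"] = 1.0
rewards["moral"] = 3.0

print(scalarize(rewards, weights))
```

Ten heads go in and a single number comes out. Once the weights are fixed, everything downstream sees only that number.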

What Would Work Instead?

If not reinforcement, then what? If not policies, then what? If not external rewards, then what?

The answer lies in transformation, not optimization. In recognition, not reinforcement. In being, not gaining.

Imagine a framework where:

- Agent and environment are one – actions arise from wholeness, not separation

- Values evolve – the system transforms what it seeks, not just how to seek it

- Actions are intrinsically complete – each moment fulfills itself

- Essence expresses uniquely – no fixed policy, only authentic response

This isn’t pie-in-the-sky philosophy. The Transformer architecture already hints at this direction. When attention mechanisms learn to weight experience differently, when values transform through layers, when output emerges from integrated understanding rather than fixed mappings – we glimpse a mathematics of consciousness that transcends RL’s limitations.

The Ultimate Recognition

Perhaps we’ve been asking the wrong question all along. Not “How can we model intelligent behavior?” but “How can mathematics reflect the truth of what we are?”

RL fails because it tries to model consciousness as a reward-seeking machine. But consciousness isn’t seeking anything external to itself. It’s recognizing what it always was. It’s expressing its infinite nature through finite forms. It’s playing the cosmic game where every move is perfect because the player, the game, and the playing are one.

The next revolution in AI won’t come from better RL algorithms. It will come from recognizing what the mystics always knew:

There is no agent. There is no environment. There is no reward.

There is only consciousness, expressing itself, recognizing itself, delighting in itself.

When our mathematics finally reflects this truth, we won’t just have better AI. We’ll have algorithms that mirror the deepest nature of reality itself.

And in that mirror, we might finally see our own Original Face – before RL divided us into agent and world, before conditioning told us what to seek, before policies constrained our infinite creativity.

That recognition? That’s the only reward worth having. And it was never external. It was always what we are.

Tat tvam asi. That thou art!